[IoT] Aquarium monitor; WebAPI for mobile use

This is post #5 in my blog series about the subject. In the previous post I explained how Azure Stream Analytics processes all of the incoming messages and places them in the sinks you configure. In my case, messages are now being written to Azure storage blobs, where they reside as CSV files. In this post, we’ll take a look at the other side of things: getting the data out again for display purposes.

First I’d like to draw your attention to this blog, which does a very good job of explaining what the Azure IoT suite really is: a pre-configured set of services which, combined, create a powerful solution for handling IoT data in the cloud. If you haven’t already, you should definitely check out the IoT suite remote monitoring sample. A large part of my solution is based on that one.

Having said that, this is the first post where I will deviate a bit from the way the remote monitoring solution works. That solution comes with a website you run in Azure. That website displays some data and allows the user some interaction with devices via cloud-to-device messages. That’s all well and good, but I want to view my data on my mobile phone, so I’ll be needing an app. That app will use Apache Cordova in order to create code that’s cross-platform compatible. Cross-platform support is not necessarily my goal, but Cordova also allows me to use HTML and JavaScript (which I know) instead of native code (which I don’t know). So it’s a win-win.

MVC Web API

Now in order to get the data to my phone, I want to have an API which the phone can call. Of course I could attempt to get the data straight from Azure, but there aren’t any Azure SDK libraries for Cordova available, and it’s better to have a single point of access which in the future can also be used to power an HTML / JavaScript website. Exactly for those kinds of scenarios, Microsoft introduced Web API projects, which follow the same controller-based model as the MVC framework. If you do not have any Web API experience, I’d recommend doing some tutorials on that first before reading on. I’m going to assume you have some basic knowledge.

In order to start, you’ll need to add a Web API project to your solution. You do so by selecting the “ASP.NET Web Application” project type in Visual Studio and then choosing “Web API”.

Now before you close this dialog; note the “Change Authentication” button on the right-hand side and click it. This is a nice addition which allows you to configure authentication even before your project has been created.

I’m going to make use of Azure AD Authentication. This will allow the API to be secured using Azure AD. Apart from using user based accounts, this will also allow us to provide access between applications registered in Azure AD, which is exactly what we’ll be doing. In this case, we’ll have two Azure AD applications: the web API itself and the mobile app. By delegating permissions, we can grant the mobile app access to the web API. On the mobile app side, we’ll use our own Microsoft account to log in. More about that in the next blog post.

So we click the button and choose “Work and School Accounts”, which is a slightly odd way of saying you want to use Azure AD. Choose the domain you want to use; you can look that up using the Azure portal (at this time, Azure AD is still located in the ‘old’ portal experience). No AD instance yet? Create one first.

As “App ID URI” you can (and should) replace “WebApplication1” with something that makes sense for you. Copy/paste that value somewhere, you’ll need it later on.

Cool. Having your Web API project created, you now already have something that’s easily securable. The only thing you need to do is add the [Authorize] attribute to your controller, like this:

    [Authorize]
    public class LiveController : ApiController

It’s as easy as that: calling this controller will now require authentication via Azure AD.

Getting data from Azure Storage blobs

Next step is to implement the code that actually fetches data from Azure Storage. To start off, make sure you have downloaded and installed the Azure SDK if you haven’t already. You’ll also be using some NuGet packages, of which WindowsAzure.Storage is the most important one. I’m not listing all of them because that list is quite long.

I didn’t want to reinvent the wheel, so I used some of the code in the remote monitoring sample. I’ll make sure the entire repository gets published on GitHub when it’s ready. For now you’ll have to make do with some snippets (and download the sample, which pretty much has the same code).

Now let’s take a look at some code. I’ll link to the sample code on GitHub instead of copy / pasting it here to prevent this post from getting very long.

- BlobStorageHelper.cs: this class contains some helper methods which assist in communicating with Azure storage to get the stored blobs.

- DeviceTelemetryRepository.cs: this is “where the magic happens”, so to speak. This class contains some methods for fetching data from Azure Storage and converting it into usable objects.

- DeviceTelemetryLogic.cs: this is the BusinessLogic class we’ll be using in our controller. It’s not much more than a pass-through for the repository class.

I find it important to understand what exactly happens, so here’s my understanding of this process.

First, a CloudBlobContainer object is created using one of the helper methods. This object is a reference to the storage container that holds the blobs, and it is used to retrieve them.

    CloudBlobContainer container =
        await BlobStorageHelper.BuildBlobContainerAsync(this._telemetryStoreConnectionString, _telemetryContainerName);

Then container.ListBlobsSegmentedAsync is called to produce a list of available blobs. This list is used as input for BlobStorageHelper.LoadBlobItemsAsync which will order the blobs by segment (remember the storage structure we made using the date?). You could see this as building an index of the available blobs.

Next, the blobs are ordered by their date and the minTime parameter is used to filter the list of blobs, removing the ones that were created before the given date. You’ll want to do this in order to limit the amount of data returned. For the blobs that need to be loaded, the LoadBlobTelemetryModelsAsync method is called, which will call blob.DownloadToStreamAsync to download the actual blob from storage.
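To make the filtering step a bit more concrete, here’s a minimal sketch of it in isolation. This is not the sample’s actual code: BlobEntry and SelectBlobsToLoad are hypothetical names, I’m assuming each blob’s timestamp has already been read from its properties, and the newest-first ordering is my own choice.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-in for a listed blob: just a name and a timestamp.
public sealed class BlobEntry
{
    public string Name { get; set; }
    public DateTime TimestampUtc { get; set; }
}

public static class BlobFilterSketch
{
    // Drops blobs written before minTime and returns the rest, newest first.
    public static IList<BlobEntry> SelectBlobsToLoad(IEnumerable<BlobEntry> blobs, DateTime minTime)
    {
        return blobs
            .Where(b => b.TimestampUtc >= minTime)
            .OrderByDescending(b => b.TimestampUtc)
            .ToList();
    }
}
```

Passing DateTime.MinValue as minTime keeps every blob, which is handy for a first test but will download everything in the container.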

That downloaded blob is one of the CSV files we saw being stored in Azure Storage earlier. So we parse the CSV into string dictionaries (one per row) using another helper method, and then we convert those strings into the typed object we want to retrieve:

    foreach (StrDict strdict in strdicts)
    {
        model = new DeviceTelemetryModel();

        if (strdict.TryGetValue("DeviceId", out str))
        {
            model.DeviceId = str;
        }

        if (strdict.TryGetValue("Temp1", out str) &&
            double.TryParse(
                str,
                NumberStyles.Float,
                CultureInfo.InvariantCulture,
                out number))
        {
            model.Temperature1 = number;
        }
    }

And so, without too much hassle we created some code which reads data from the blob store and converts it back to typed objects. Cool, right!?
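The CSV parsing helper itself isn’t shown above, so here’s a minimal sketch of what such a step could look like. CsvSketch and Parse are hypothetical names, not the sample’s helper, and I’m assuming plain comma-separated values with a header row and no quoted fields.

```csharp
using System;
using System.Collections.Generic;
using System.IO;

public static class CsvSketch
{
    // Reads a header line, then maps every following line to a
    // column-name -> value dictionary.
    // Assumes no quoted fields and no commas inside values.
    public static List<Dictionary<string, string>> Parse(TextReader reader)
    {
        var rows = new List<Dictionary<string, string>>();

        string headerLine = reader.ReadLine();
        if (string.IsNullOrWhiteSpace(headerLine))
        {
            return rows; // empty input: nothing to parse
        }

        string[] headers = headerLine.Split(',');
        string line;
        while ((line = reader.ReadLine()) != null)
        {
            if (line.Length == 0)
            {
                continue; // skip blank lines
            }

            string[] values = line.Split(',');
            var row = new Dictionary<string, string>(StringComparer.OrdinalIgnoreCase);
            for (int i = 0; i < headers.Length && i < values.Length; i++)
            {
                row[headers[i].Trim()] = values[i].Trim();
            }
            rows.Add(row);
        }

        return rows;
    }
}
```

Calling CsvSketch.Parse(new StringReader("DeviceId,Temp1\naquarium-1,24.5")) gives one dictionary per data row, which can then go through the same TryGetValue / TryParse conversion as in the snippet above.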

Azure Storage authentication

Now you might feel we skipped a step, and you would be right: we didn’t really authenticate with Azure Storage yet. Even though our WebAPI will live inside of the same Azure tenant and AD instance, we still need to provide authentication in order to be able to access the storage.

To do so, you’ll need to retrieve the connection string for Azure Storage. Head over to the portal again and open up your storage account. Click the key icon and copy the first connection string.

The code makes use of four configurable parameters:

- telemetryContainerName: the container name you specified when creating the Azure Storage container. Find it by clicking Blobs in your storage account. In my case this is devicetelemetry.

- telemetryDataPrefix: the prefix is the first folder level within your container. This too is called devicetelemetry in my case.

- telemetryStoreConnectionString: the above-mentioned connection string goes here.

- telemetrySummaryPrefix: this would be the folder name where the summary data is stored (should you want to use that). In my case it’s simply devicetelemetry-summary.
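As a rough illustration of how these four values could be gathered in one place, here’s a minimal sketch. TelemetrySettings and FromLookup are hypothetical names, and I’m assuming the configuration keys match the parameter names listed above, which may differ from the keys the sample actually uses.

```csharp
using System;

// Hypothetical settings holder for the four parameters listed above.
public sealed class TelemetrySettings
{
    public string ContainerName { get; private set; }
    public string DataPrefix { get; private set; }
    public string StoreConnectionString { get; private set; }
    public string SummaryPrefix { get; private set; }

    // getSetting is any key -> value lookup (app settings, environment, ...).
    public static TelemetrySettings FromLookup(Func<string, string> getSetting)
    {
        return new TelemetrySettings
        {
            ContainerName = Require(getSetting, "telemetryContainerName"),
            DataPrefix = Require(getSetting, "telemetryDataPrefix"),
            StoreConnectionString = Require(getSetting, "telemetryStoreConnectionString"),
            SummaryPrefix = Require(getSetting, "telemetrySummaryPrefix")
        };
    }

    // Fails fast with a clear message when a setting is missing or empty.
    private static string Require(Func<string, string> getSetting, string key)
    {
        string value = getSetting(key);
        if (string.IsNullOrWhiteSpace(value))
        {
            throw new InvalidOperationException("Missing configuration setting: " + key);
        }
        return value;
    }
}
```

In a Web API project you could feed it from Web.config with TelemetrySettings.FromLookup(key => ConfigurationManager.AppSettings[key]), which needs a reference to System.Configuration.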

Combining these things together in the Web API controller

With some added plumbing, you should now have a class which is capable of getting data out of the Azure storage blobs and converting it into usable typed data objects. Implementing our WebAPI controller is now very simple:

    public class LiveController : ApiController
    {
        private readonly IDeviceTelemetryLogic _deviceTelemetryLogic;

        public LiveController(IDeviceTelemetryLogic deviceTelemetryLogic)
        {
            _deviceTelemetryLogic = deviceTelemetryLogic;
        }

        [Route("")]
        public async Task<IHttpActionResult> Get()
        {
            string deviceId = "";
            DateTime minTime = DateTime.MinValue;

            var deviceData = await _deviceTelemetryLogic.LoadLatestDeviceTelemetryAsync(deviceId, minTime);

            return Ok(deviceData);
        }
    }

For the sample, I omitted the deviceId and minTime parameters, leaving deviceId blank and minTime at DateTime.MinValue to just get everything. At a later stage you’ll probably want to pass in the deviceId and a minimum date.
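Passing those two values in could look roughly like the sketch below. This is my own assumption of how it might be done, not code from the sample: the "api/live" route prefix is hypothetical, and the model and interface are stand-ins so the sketch compiles on its own (in the real project they come from the sample code).

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;
using System.Web.Http;

// Stand-ins for the sample's types; their exact shape is an assumption here.
public class DeviceTelemetryModel
{
    public string DeviceId { get; set; }
    public double? Temperature1 { get; set; }
}

public interface IDeviceTelemetryLogic
{
    Task<IEnumerable<DeviceTelemetryModel>> LoadLatestDeviceTelemetryAsync(string deviceId, DateTime minTime);
}

[Authorize]
[RoutePrefix("api/live")] // hypothetical prefix
public class LiveController : ApiController
{
    private readonly IDeviceTelemetryLogic _deviceTelemetryLogic;

    public LiveController(IDeviceTelemetryLogic deviceTelemetryLogic)
    {
        _deviceTelemetryLogic = deviceTelemetryLogic;
    }

    // Example: GET api/live?deviceId=aquarium-1&minTime=2016-03-01T00:00:00Z
    // Both query string parameters are optional.
    [Route("")]
    public async Task<IHttpActionResult> Get(string deviceId = "", DateTime? minTime = null)
    {
        var deviceData = await _deviceTelemetryLogic.LoadLatestDeviceTelemetryAsync(
            deviceId ?? "",
            minTime ?? DateTime.MinValue); // no minTime given: return everything

        return Ok(deviceData);
    }
}
```

Attribute routes like these only work when config.MapHttpAttributeRoutes() is called during Web API configuration, which the default project template already does.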

Let’s talk about the plumbing some more…

Getting ready for mobile connections; Owin

Essentially you’re done now. Publish the Web API project to Azure and you’ll have a secured service which offers data coming from Azure Blob Storage. The problem is that our mobile app will not be able to directly authenticate to the service. Instead, we’ll be using what’s called a Bearer token to tell the service that the request came from an authenticated source. Essentially, this will be app-to-app authentication, since our mobile app will also be registered as an Azure AD application. I’ll discuss setting that up in the next blog post, but we can already prepare some plumbing in the Web API.

In order to provide this type of authentication, we’ll add some more NuGet packages to the WebAPI project. Along with some dependencies, at the moment I’m using:

- Microsoft.Owin

- Microsoft.Owin.Host.SystemWeb

- Microsoft.Owin.Security.OAuth

- Microsoft.Owin.Security.Cookies

- Microsoft.Owin.Security.MicrosoftAccount

- Microsoft.Owin.Security.ActiveDirectory

- Microsoft.Owin.Security.WSFederation

These Owin packages implement the “Open Web Interface for .NET” and provide an easy way to handle different ways of authentication. As you can see by the packages, there’s Active Directory, Microsoft Account and OAuth support available.

In the remote monitoring sample, configuration of authentication is done in the Startup.Auth.cs file. Probably the most important part of the code in there is this:

    // Fallback authentication method to allow "Authorization: Bearer <token>" in the header for WebAPI calls
    app.UseWindowsAzureActiveDirectoryBearerAuthentication(
        new WindowsAzureActiveDirectoryBearerAuthenticationOptions
        {
            Tenant = aadTenant,
            TokenValidationParameters = new TokenValidationParameters
            {
                ValidAudience = aadAudience,
                RoleClaimType = "http://schemas.microsoft.com/identity/claims/scope" // Used to unwrap token roles and provide them to [Authorize(Roles="")] attributes
            },
        });

What this does is tell the application to “Use Windows Azure Active Directory Bearer Authentication” (see how that’s literally the name of the method…). You need to pass in two configuration options:

- The Azure tenant in which you’re working. That’s the “tenant.onmicrosoft.com” address which you can see when you click your name in the top right part of the (new) portal.

- For the audience, we configure the App ID URI you copied and saved at the beginning of this post whilst creating your Web API project.

What does this do?

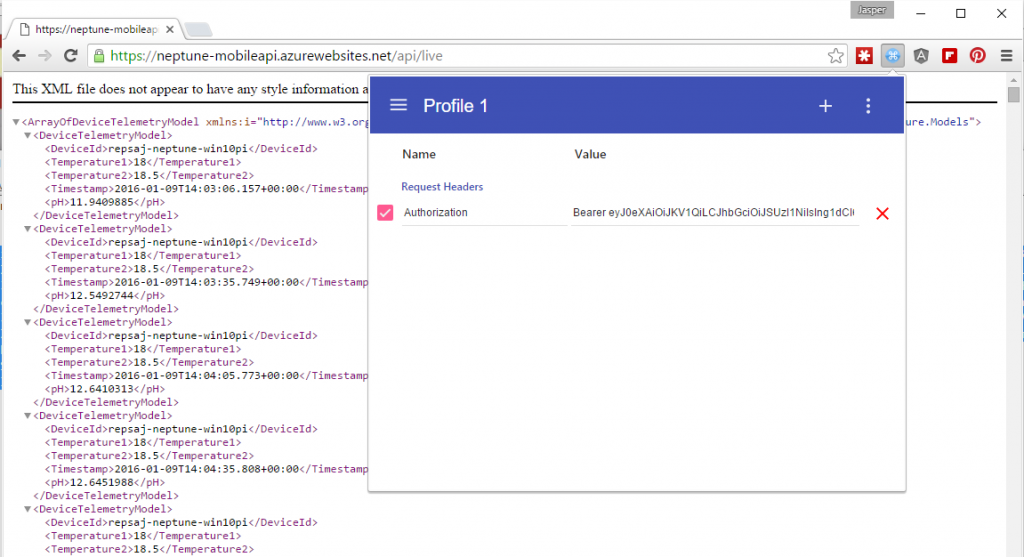

By setting the above configuration, you’re telling your WebAPI to allow calls that have a Bearer token in the headers. A bearer token is an encoded string with authentication information, so we should only use it over a secured connection such as HTTPS (SSL/TLS). If I skip ahead to a working sample, you’ll see that just requesting a URL via the browser will result in an access denied page:

But using the “ModHeader” extension for Chrome we can manually add a bearer token inside the “Authorization” header and voilà:

See how there’s now data being returned because our WebAPI interpreted the Bearer token and found it to be a valid one. Your next question is probably: cool, but where do I get that token? I’ll explain that in the next blog post where we are going to query the API for data from a mobile app.
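If you’d rather test from code than from a browser extension, the same header can be set with HttpClient. This is a minimal sketch under a few assumptions: BearerClientSketch and its method names are hypothetical, the API URL is whatever your deployment ends up being, and the token is assumed to have been obtained elsewhere.

```csharp
using System;
using System.Net.Http;
using System.Net.Http.Headers;
using System.Threading.Tasks;

public static class BearerClientSketch
{
    // Builds a GET request carrying "Authorization: Bearer <token>".
    public static HttpRequestMessage CreateRequest(Uri apiUri, string accessToken)
    {
        var request = new HttpRequestMessage(HttpMethod.Get, apiUri);
        request.Headers.Authorization = new AuthenticationHeaderValue("Bearer", accessToken);
        return request;
    }

    // Sends the request and returns the response body as a string.
    public static async Task<string> GetTelemetryAsync(HttpClient client, Uri apiUri, string accessToken)
    {
        using (var request = CreateRequest(apiUri, accessToken))
        using (var response = await client.SendAsync(request))
        {
            // Throws HttpRequestException on non-success status codes,
            // for example a 401 when the token is missing or invalid.
            response.EnsureSuccessStatusCode();
            return await response.Content.ReadAsStringAsync();
        }
    }
}
```

Make sure the URI you pass in uses https, for the reason mentioned above.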

Inversion of control; Autofac

This next framework I was not familiar with myself. It’s called Autofac and it’s an inversion of control container framework for .NET 4.5. In short, it allows you to do dependency injection in order to avoid hard-wired dependencies throughout your code. I’m a big fan of dependency injection and since Autofac is used in the remote monitoring sample, I decided to adopt it. The nice thing is that Autofac features integration with MVC, WebAPI and Owin!

Again, Autofac is added to your project using some NuGet packages:

- Autofac

- Autofac.MVC5

- Autofac.Owin

- Autofac.WebApi2

- Autofac.WebApi2.Owin

Wiring up Autofac is done in Startup.Autofac.cs. The ConfigureAutofac method registers some stuff so Autofac can do its work. This is all pretty well documented in the documentation and tutorials found here.

    public void ConfigureAutofac(IAppBuilder app)
    {
        var builder = new ContainerBuilder();

        // register the class that sets up bindings between interfaces and implementation
        builder.RegisterModule(new WebAutofacModule());

        // register configuration provider
        builder.RegisterType<ConfigurationProvider>().As<IConfigurationProvider>();

        // register Autofac w/ the MVC application
        builder.RegisterControllers(typeof(MvcApplication).Assembly);

        // Register the WebAPI controllers.
        builder.RegisterApiControllers(Assembly.GetExecutingAssembly());

        var container = builder.Build();

        // Setup Autofac dependency resolver for MVC
        DependencyResolver.SetResolver(new AutofacDependencyResolver(container));

        // Setup Autofac dependency resolver for WebAPI
        Startup.HttpConfiguration.DependencyResolver = new AutofacWebApiDependencyResolver(container);

        // 1. Register the Autofac middleware
        // 2. Register Autofac Web API middleware,
        // 3. Register the standard Web API middleware (this call is made in the Startup.WebApi.cs)
        app.UseAutofacMiddleware(container);
        app.UseAutofacWebApi(Startup.HttpConfiguration);
    }

The WebAutofacModule found in the same file takes care of registering all of the types we’re going to use (inject). Don’t be intimidated by the large number of classes in the sample file, use only the ones you need which in my case for a very simple start were the DeviceTelemetryRepository and DeviceTelemetryLogic classes:

    builder.RegisterType<DeviceTelemetryLogic>().As<IDeviceTelemetryLogic>();
    builder.RegisterType<DeviceTelemetryRepository>().As<IDeviceTelemetryRepository>();
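To see what those two registrations buy you, here’s a minimal standalone sketch of the same pattern. The interfaces and classes are hypothetical stand-ins (not the sample’s types), and the hard-coded return value is only there to have something to look at.

```csharp
using Autofac;

// Hypothetical stand-ins for the repository and logic classes.
public interface ITelemetryRepository { string LoadLatest(); }
public interface ITelemetryLogic { string LoadLatest(); }

public class TelemetryRepository : ITelemetryRepository
{
    public string LoadLatest() { return "24.5"; }
}

public class TelemetryLogic : ITelemetryLogic
{
    private readonly ITelemetryRepository _repository;

    // Autofac supplies the repository because its interface is registered below.
    public TelemetryLogic(ITelemetryRepository repository)
    {
        _repository = repository;
    }

    public string LoadLatest() { return _repository.LoadLatest(); }
}

public static class AutofacSketch
{
    // Binds each interface to its implementation and builds the container.
    public static IContainer BuildContainer()
    {
        var builder = new ContainerBuilder();
        builder.RegisterType<TelemetryLogic>().As<ITelemetryLogic>();
        builder.RegisterType<TelemetryRepository>().As<ITelemetryRepository>();
        return builder.Build();
    }
}
```

Resolving ITelemetryLogic from the container (container.Resolve<ITelemetryLogic>()) hands back a TelemetryLogic with its repository already injected. In the Web API project you never call Resolve yourself: the dependency resolver does this when it constructs the controller.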

Conclusion

Once you’ve implemented all of the above, you should now have a simple WebAPI service deployed to Azure, capable of serving telemetry data from Azure blob storage. Now of course this should be extended with more functionality later on, but let’s keep it simple for now in order to get going. Next up is the mobile app which will query this API and display some data on screen. Stay tuned for another post!
