[IoT] Replacing webjob with Azure function

In this blog post I will not be adding any new functionality to my aquarium monitor project. Instead, I’m going to replace existing functionality. Not because that’s needed, but just because I can 😉 We’ll be replacing our webjob with something new called Azure Functions.

If you didn’t read it or do not recall, check out the post I wrote on how I implemented notifications. I made use of a webjob to monitor the event hub for incoming notifications (generated by Azure Stream Analytics) and send those to the notification hub. A few lines of code also constructed the message to send.

What are Azure functions?

So what exactly is an Azure function? Well, the name says it all this time: it really is just a function you can deploy onto Azure. So instead of deploying a package with lots of code inside, an Azure function is just one function that is triggered somehow. And as with a normal function you’d write in your favorite programming language, it comes with input and output.

Next to input and output, an Azure function also needs a way of triggering it, since you probably want your function to actually do something at a certain point in time. Triggering can be done in several different ways:

- Storage blob: the function is triggered by a blob being written to a specific Azure Storage account.

- Event hub: the one I will be using; the function is triggered by a new event in an Azure event hub instance.

- Webhook: using webhooks you can trigger your function from any service that supports webhooks.

- GitHub Webhook: specific to GitHub, this trigger can help you act on events originating from GitHub.

- HttpTrigger: simply triggered by receiving an HTTP request.

- QueueTrigger: similar to the event hub trigger, this one triggers based on new items within an Azure queue. You can see how this can be useful for all kinds of processing scenarios.

For input and output there are also multiple options, which I won’t all discuss here. You probably get where this is going: all of the major Azure services like blobs, DocumentDB, service bus, etc. are supported.

Why an Azure function?

This was the question I had when I came across this. We already have WebAPI and WebJobs to take care of these kinds of things, right? And granted: you can achieve the same results using those technologies too. But simplicity is key here. Functions force you to have a clean and simple application architecture. They’re also simple to write, maintain and host. And since they run on Azure and you do not need to worry about infrastructure, they scale in a very simple way.

Let’s take a closer look at our scenario. I’ve previously used a web job to monitor my event hub instance for incoming messages. These messages were processed by the job and then sent to the notification hub. For this I had to create a web job, connect it to the event hub with a message factory instance, provide some configuration to store these connection parameters, connect to the notification hub, again provide connection details, etc. etc. That’s quite some plumbing required for what ended in a few lines of code taking in a message and sending one out at the other end. It’s not hard, but still.

Now with an Azure function, everything except for the function body can be clicked and configured within the Azure portal itself. There’s no need for plumbing code any more; I only need to write the bit I actually care about.

Creating an Azure Function

To get started with this, you head over to functions.azure.com where, once logged in, you’ll have the ability to create a function app. You can also use the “New” button in Azure and search for “Function App” which is in the “Web + Mobile” category. A function app is the container for your functions, so don’t name it like the function you want to create (made that mistake myself).

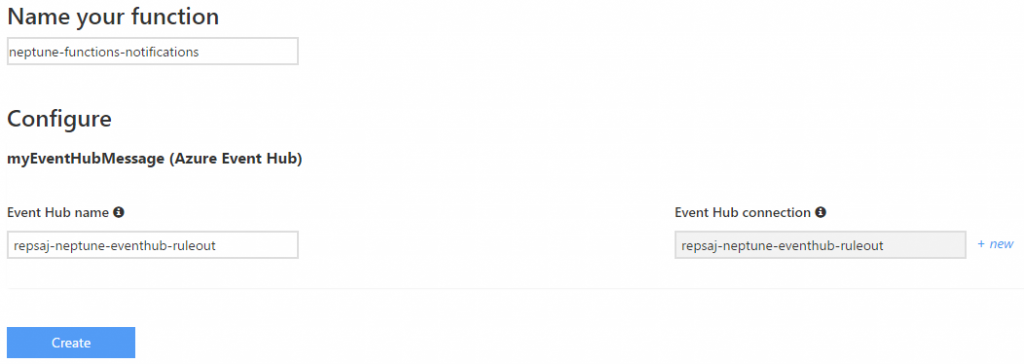

Once your container is created, you’ll be in the familiar environment of the Azure portal where you can start creating actual functions. I started by creating an EventHubTrigger function in C#. At this time, you can write your function code in either C# or Node.js.

Important: when you create your function, Azure will ask in which data center location you want to create it. Ensure that you choose the one in which you have also created your event hub and storage account. Functions cannot use resources located in other data centers. Not sure whether this might change in the future.

The input you need to give depends on the type of function you selected, so in this case it’s asking me to configure an event hub to use.

Note: when I copied the connection string for my event hub instance, it included an “EntityPath” parameter. Azure Functions will complain about that, telling you to remove it. So before pasting in your connection string, remove that bit including the semicolon (;) before it.
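If you would rather not edit the connection string by hand, the clean-up described above can be sketched as a tiny helper. This is only an illustration: `StripEntityPath` is a hypothetical name of my own, not part of any Azure SDK, and the sample values in the usage note are made up.

```csharp
using System;
using System.Linq;

public static class ConnectionStrings
{
    // Hypothetical helper: drops the "EntityPath=..." segment (and the
    // semicolon that separates it) from an Event Hub connection string.
    public static string StripEntityPath(string connectionString)
    {
        var parts = connectionString
            .Split(new[] { ';' }, StringSplitOptions.RemoveEmptyEntries)
            .Where(p => !p.TrimStart().StartsWith("EntityPath=", StringComparison.OrdinalIgnoreCase));

        // Re-join the remaining key=value pairs with single semicolons.
        return string.Join(";", parts);
    }
}
```

Calling `ConnectionStrings.StripEntityPath("Endpoint=sb://example.servicebus.windows.net/;SharedAccessKeyName=listen;SharedAccessKey=abc123=;EntityPath=my-hub")` leaves everything up to and including the `SharedAccessKey` pair intact and drops the rest.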

Configuring the output

Your newly created function will come with a parameter that contains the event hub message in string format, which means we do not have to configure an additional input. For the output, we’ll need to configure the notification hub instance, which is done by simply adding an output, selecting the notification hub type and then clicking the instance name (there’s the simplicity I mentioned).

Editing the function

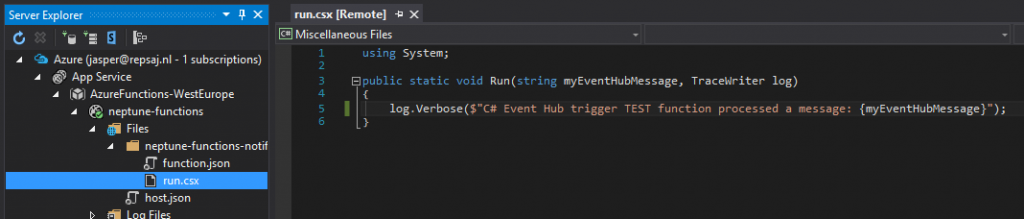

For now, it seems that editing the body of the function is done in the browser, similar to what we see with ASA jobs for instance. I do miss an “open in Visual Studio” button here, which I was kind of expecting to be there. Visual Studio also doesn’t have a template for these functions. You can, however, use the server explorer in VS to open up your function app and then open the “run.csx” file. This will have the same code in it, which you can simply edit and save back to Azure. Works just fine and gives you the full power of VS. Whether you need that is a different matter, as you should probably try to keep the body of these functions nice and simple.

Another option is to enable Visual Studio Online. You can do so by opening the “App Service Settings” pane (found on the “Function app settings” page) and clicking “Tools”. Then select “Visual Studio Online” and enable the service. You can then click “Go” to open up the solution in VS Online. The contents will be the same as in Visual Studio itself, with one difference: you cannot add new files in the desktop client, whereas you can in VS Online. We’ll actually need this later on, so enable VS Online for your function app!

Binding data

The function will come with a function.json file. When you open it, you’ll see something like:

    {
      "bindings": [
        {
          "path": "repsaj-neptune-eventhub-ruleout",
          "connection": "repsaj-neptune-eventhub-ruleout",
          "type": "eventHubTrigger",
          "name": "myEventHubMessage",
          "direction": "in"
        },
        {
          "name": "notification",
          "type": "notificationHub",
          "hubName": "repsaj-neptune-notifications",
          "connection": "repsaj-neptune-notifications_NOTIFICATIONHUB",
          "tagExpression": "",
          "direction": "out"
        }
      ],
      "disabled": false
    }

So this is the place where the bindings are configured, in this case one input (event hub) and one output (notification hub).

The input parameter in my case is just a string, nice and simple. But how do we pass any output to the notification hub? Check the developer reference to find out what each binding expects. When data is passed as JSON objects, which have no fixed structure by default, you need to shape them so they can be mapped to the corresponding output of your function. Each output type expects its data in a different shape.

Ok, time for some code!

    using System;
    using Newtonsoft.Json;
    using Newtonsoft.Json.Linq;
    using Microsoft.Azure.NotificationHubs;

    // Entry point: the parameter names must match the "name" values of the bindings.
    public static void Run(string myEventHubMessage, out Notification notification, TraceWriter log)
    {
        log.Info($"C# Event Hub trigger function processed a message: {myEventHubMessage}");

        string message = GetMessage(myEventHubMessage);
        log.Info($"Message: {message}");

        // The message is concatenated into the JSON payload as-is, so it must not
        // contain characters that need escaping (such as double quotes).
        notification = new GcmNotification("{\"data\":{\"message\":\""+message+"\"}}");
    }

    // Turns the Stream Analytics event into a human-readable notification text.
    private static string GetMessage(string input)
    {
        // The event hub message holds a JSON array; only the first element is used.
        dynamic eventData = ((JArray)JsonConvert.DeserializeObject(input))[0];

        if (eventData.readingtype == "leakage")
            return String.Format("Leakage detected! Sensor {0} has detected a possible leak.", eventData.reading);
        else
            return String.Format("Sensor {0} is reading {1:0.0}, threshold is {2:0.0}.", eventData.readingtype, eventData.reading, eventData.threshold);
    }

Wait. What’s that? Two functions within one Azure function? Euhm yeah, so this might be the only time when the naming does get a bit confusing after all. You can define multiple functions within your code if you want to split up things a bit. Note that I could have written everything in a single function and in this case that wouldn’t have been that bad. But for readability it’s nicer to split things up imho.

A few things to note:

- The output of our function is defined as an out parameter, not as a return type. That’s most likely due to the fact that you can specify multiple out parameters (mapping to multiple outputs), but only one return type. You need to assign a value to those out parameters you’re using.

- See how I’m converting the incoming string to a JArray and taking the first object from that array? This is actually analogous to the code from the webjob we created earlier!
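One thing the string concatenation in `Run` does not handle is escaping: a double quote or backslash in the message would produce invalid JSON. A minimal sketch of an alternative, assuming you are happy to let Json.NET build the payload, could look like this. `GcmPayload` and `Build` are names I made up for the illustration; the payload shape is the same `{"data":{"message":...}}` used above.

```csharp
using Newtonsoft.Json;
using Newtonsoft.Json.Linq;

public static class GcmPayload
{
    // Hypothetical helper: builds {"data":{"message":"..."}} and lets
    // Json.NET take care of escaping the message text.
    public static string Build(string message)
    {
        var payload = new JObject(
            new JProperty("data",
                new JObject(new JProperty("message", message))));

        // Formatting.None keeps the payload on a single line.
        return payload.ToString(Formatting.None);
    }
}
```

In the function body this would be used as `notification = new GcmNotification(GcmPayload.Build(message));`.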

NuGet packages

As you can see in the code sample, I’m using the Newtonsoft and Azure notification libraries inside my code. But how does the function know about these packages? Simple: NuGet! Open up your Visual Studio Online instance and, within the function folder, add a new file called project.json. Inside this file, you can specify the packages to load as follows:

    {
      "frameworks": {
        "net46": {
          "dependencies": {
            "Microsoft.Azure.NotificationHubs": "1.0.4",
            "Newtonsoft.Json": "8.0.3"
          }
        }
      }
    }

Nice and simple. One note of caution: with great power comes great responsibility. Since you can push your own NuGet packages, you *could* create huge libraries which do all kinds of processing and call those from your function. But just because you could doesn’t mean you should; I would advise keeping functions as simple as possible. When you need to do more complex stuff, use multiple functions or use your function to call an API which does the actual processing. In an architecture I would regard functions as glue to integrate things, not as the container for business logic.

More detail on adding NuGet packages is found here.

Testing

Once you have your code implemented, the function page will give you the option to provide a payload to test the function. It also outputs logging data so you can monitor what’s going on. And because your function is probably not too complicated, debugging usually isn’t either. You can also attach your local Visual Studio debugger to the online instance of your function and debug that way, awesome!
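As an illustration, a test payload for this function could look like the sketch below. The field names are the ones `GetMessage` reads (`readingtype`, `reading`, `threshold`); the values are made up, and the real Stream Analytics output may well contain more fields than these.

```json
[
  {
    "readingtype": "temperature",
    "reading": 27.4,
    "threshold": 26.0
  }
]
```

Note that the payload is an array, because the code takes the first element of a `JArray`. Changing `readingtype` to `"leakage"` should exercise the other branch of `GetMessage`.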

Serverless & stateless

Azure Functions are being advertised as “serverless”. This basically means that Azure will run your function “somewhere”, but doesn’t reserve any dedicated hardware for it. Of course there is hardware involved somewhere, but the idea is that this is handled by the fabric layer. You cannot and should not care about this.

They’re also stateless, so a function doesn’t know about states or sessions; each request is a new one within its own confined context. This is the one bit where my web job gave me a few more options. Because I didn’t want to spam users with a lot of notifications, I had a timer running in my web job to keep track of when the last notification was sent. I can’t do the same in my function, but you can write the timestamp to a blob in Azure Storage which you use both as input (check datetime) and output (write new datetime).

Adding a timer

That said, I had one problem left. You might remember that I added a timer to my webjob to make sure it didn’t send out a sh*tload of notifications as soon as sensor readings would be running outside of bounds. Hmmm, so how am I going to prevent the function from doing that then?

The answer is actually quite simple. I defined an extra pair of input and output, both of the Azure Blob storage type. This allows me to read and write data from a blob file stored in Azure. Within that blob file I simply save the datetime of the last processed event. That’s then fed to my function as input again and I can now easily compare whether more than 60 minutes have passed in the meantime.
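For reference, the extra pair of bindings in function.json might look roughly like this. Treat it as a sketch: the `name` values match the `inputBlob` and `outputBlob` parameters of the `Run` signature, but the `path` and `connection` values are placeholders I made up, and the existing event hub and notification hub entries are left out for brevity.

```json
{
  "bindings": [
    {
      "name": "inputBlob",
      "type": "blob",
      "path": "notifications/lastsent.txt",
      "connection": "mystorage_STORAGE",
      "direction": "in"
    },
    {
      "name": "outputBlob",
      "type": "blob",
      "path": "notifications/lastsent.txt",
      "connection": "mystorage_STORAGE",
      "direction": "out"
    }
  ]
}
```

Both entries point at the same blob, so whatever the function writes on one run is what it reads on the next.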

Here’s the finished function code:

    using System;
    using System.Collections.Generic;
    using Newtonsoft.Json;
    using Newtonsoft.Json.Linq;
    using Microsoft.Azure.NotificationHubs;

    public static void Run(string myEventHubMessage, string inputBlob, out Notification notification, out string outputBlob, TraceWriter log)
    {
        log.Info($"C# Event Hub trigger function processed a message: {myEventHubMessage}");

        // An empty blob means no notification has been sent yet.
        if (String.IsNullOrEmpty(inputBlob))
            inputBlob = DateTime.MinValue.ToString();

        DateTime lastEvent = DateTime.Parse(inputBlob);
        TimeSpan duration = DateTime.Now - lastEvent;

        if (duration.TotalMinutes >= 60) {
            string message = GetMessage(myEventHubMessage);
            log.Info($"Sending notification message: {message}");

            notification = new GcmNotification("{\"data\":{\"message\":\""+message+"\"}}");
            // Remember when this notification was sent.
            outputBlob = DateTime.Now.ToString();
        }
        else {
            log.Info($"Not sending notification message because of timer ({(int)duration.TotalMinutes} minutes ago).");

            // Every out parameter must be assigned; null sends no notification.
            notification = null;
            outputBlob = inputBlob;
        }
    }

    // Not called by Run; kept for use with template notifications.
    private static IDictionary<string, string> GetTemplateProperties(string message)
    {
        Dictionary<string, string> templateProperties = new Dictionary<string, string>();
        templateProperties["message"] = message;
        return templateProperties;
    }

    private static string GetMessage(string input)
    {
        // The event hub message holds a JSON array; only the first element is used.
        dynamic eventData = ((JArray)JsonConvert.DeserializeObject(input))[0];

        if (eventData.readingtype == "leakage")
            return String.Format("[FUNCTION] Leakage detected! Sensor {0} has detected a possible leak.", eventData.reading);
        else
            return String.Format("[FUNCTION] Sensor {0} is reading {1:0.0}, threshold is {2:0.0}.", eventData.readingtype, eventData.reading, eventData.threshold);
    }
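One caveat with the code above: `DateTime.Now.ToString()` depends on the culture and time zone of whatever machine runs the function, which you don’t control in a serverless setup. A possible hardening, sketched here under the assumption that you store the timestamp in UTC using the round-trip ("o") format, is to pull the timer check into a small helper. `NotificationThrottle`, `ShouldSend` and `Stamp` are hypothetical names of mine.

```csharp
using System;
using System.Globalization;

public static class NotificationThrottle
{
    // Returns true when nothing usable is stored yet (null, empty or
    // unparseable) or when at least minInterval has passed since the stamp.
    public static bool ShouldSend(string lastSentBlob, DateTime utcNow, TimeSpan minInterval)
    {
        DateTime lastSent;
        bool parsed = DateTime.TryParse(
            lastSentBlob,
            CultureInfo.InvariantCulture,
            DateTimeStyles.RoundtripKind,
            out lastSent);

        if (!parsed)
            return true;

        return utcNow - lastSent.ToUniversalTime() >= minInterval;
    }

    // Serializes a timestamp in the culture-independent round-trip format.
    public static string Stamp(DateTime utcNow)
    {
        return utcNow.ToString("o", CultureInfo.InvariantCulture);
    }
}
```

In `Run`, the check would then read `NotificationThrottle.ShouldSend(inputBlob, DateTime.UtcNow, TimeSpan.FromMinutes(60))`, and `outputBlob` would be set to `NotificationThrottle.Stamp(DateTime.UtcNow)` after sending.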

Conclusion

Azure functions are a neat solution when you want to force yourself into a more microservice-like architecture. This means that your application is split up into many different, small parts which all have their own responsibility. But even if you’re not following those architecture principles, you can still make use of Azure functions. For instance to handle a submitted form (check out this example) or as a periodic process performing some processing or clean up (SharePoint timer jobs, anyone?).

Another great benefit I didn’t yet mention is that a function is continuously available, but you only pay for the time it actually runs. For the time being these functions are free, though it’s safe to assume there will be some billing involved in the (near) future. But even then, it’ll most likely be much cheaper compared to a web job which needs to run in “always on” mode to prevent it from being killed after 15 minutes or so. That web job will cost you whether or not it actually does anything; the function will not.

July 11, 2016 at 10:47 am |

This has been really helpful. Thanks!

July 11, 2016 at 11:31 am |

Thanks!

July 12, 2016 at 10:42 am |

I was just reading through some of the other parts since I’m trying a similar thing.

This is all really helpful so thanks again! Just wondering about two things, could you tell me why you chose not to do those?

1) When using Azure Functions, why not use a blob trigger since you’re also outputting everything to a blob storage (from ASA). This way you could remove the event hub and save costs

2) Why not use windowing in ASA to only send alert events every 30 minutes or something, instead of building this logic in the Function / webJob?

Not judging or anything! Just wondering and trying to figure out what is the best way to do things for myself 🙂

August 25, 2016 at 3:12 pm |

Great blog post! Btw, you don’t have to include Newtonsoft in the nuget dependencies. It’s one of the packages that MS includes by default. You just have to add `#r "Newtonsoft.Json"` before your `using` lines at the top of the function.

August 25, 2016 at 3:42 pm |

Thanks Stephen, also for the useful addition. Did not know that, right of them to have it loaded by default these days 🙂